|

The AUDIENCE Auralization System

AUDIENCE for VR is an integrated software and hardware system, composed of a software auralization machine and hardware for multichannel distribution with a loudspeaker array. The figure below shows a functional block diagram that makes explicit the main tasks and components involved in auralizing a virtual audiovisual scene.

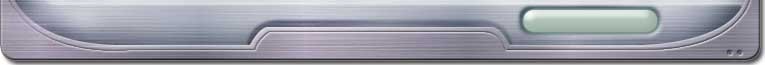

Task scenario and main components of an auralization system

At the highest level of the hierarchy, an application (client) holds control of the auralization system. At the level immediately below are the navigation function (which controls the generation of the audiovisual scene for each position of the user in the virtual world), the data structure describing the audiovisual scene, and a dynamic data update module, which interfaces with the user or with another automatic module that updates the scene objects dynamically.

The auralization module is responsible for generating the audible part of the audiovisual scene. It is built from functional components operating in each functional layer defined in the AUDIENCE architecture.

One module is responsible for synchronization, so that the auralization runs in step with the visual navigation. Another module calibrates the reference intensity levels and handles the setup of the hardware and software. Finally, the reproduction layer plays the final sounds, using a player available in the system.

In the lowest layer are the computing hardware and the audio hardware (the sound boards).

System General Block Diagram

So that virtual reality applications can produce sound using some auralization technique, we developed and investigated message-passing mechanisms, based on communication protocols, that allow the virtual reality application to control the sound synthesis.

One proposal uses asynchronous channels to transmit commands and/or data updates (physical parameters) for all objects currently in the acoustic scene, serving the processes that control the synthesis and the auralization of the acoustic scene.
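The asynchronous channel idea can be sketched in a few lines. This is only an illustration under stated assumptions: the `SceneUpdate` type, the `drain_updates` helper and the object names are hypothetical, and Python's standard `queue.Queue` stands in for whatever transport protocol the real system uses. The VR application posts updates without waiting, and the auralization process applies whatever is pending at the start of each processing cycle.

```python
import queue
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneUpdate:
    """Hypothetical update message: one object and its new position."""
    object_id: str
    position: tuple  # (x, y, z) in metres


def drain_updates(channel, scene):
    """Apply every pending update without blocking; return how many were applied."""
    applied = 0
    while True:
        try:
            update = channel.get_nowait()
        except queue.Empty:
            return applied
        # Later updates for the same object overwrite earlier ones.
        scene[update.object_id] = update.position
        applied += 1


# Sender side (VR application): post updates and carry on.
channel = queue.Queue()
channel.put(SceneUpdate("violin", (1.0, 0.0, 0.0)))
channel.put(SceneUpdate("listener", (0.0, 0.0, 0.0)))
channel.put(SceneUpdate("violin", (1.5, 0.5, 0.0)))

# Receiver side (auralization process): pick up the latest state.
scene = {}
applied = drain_updates(channel, scene)
```

Because the receiver never blocks on the channel, audio processing is not stalled when the application sends nothing during a cycle.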

Usability and control of the integrated audiovisual navigation are basic concerns for fully immersive virtual reality applications, where items such as synchronization, sharing of scene description resources, computational load allocation, and interaction design are vital, even critical, to achieving the desired final effect. These control and management requirements are necessary and are anticipated in the design of advanced systems.

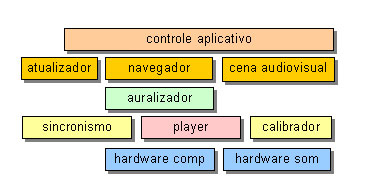

The figure shows the reference block diagram for implementing an Ambisonics auralization machine integrated with a VR application. The auralization engine appears integrated with a control application (in this case a virtual reality application, which could be, for example, a game).

An auralization machine block diagram for VR

Block descriptions

- VR Application: The VR application allows a user to open a virtual audiovisual scene on a given VR platform and to navigate and interact in this environment. It combines software comprising a 3D navigator and a system for describing and setting up the audiovisual scene (described, for example, using X3D), in addition to the interface through which the user interacts. Delivering the data that compose the auditory scene is a layer 1 function (scene description/composition).

- The application commands a visualization machine and an auralization machine. The global system must guarantee inter-process communication and audiovisual synchronization directives between the two so that the experience remains unified. A parser block extracts the scene data of interest to the auralization machine and sends them (through data-passing mechanisms) to the acoustic simulator, which is responsible for the functions in functional layer 2.

- Sounds of the sound sources and objects in the scene are retrieved from a data bank (e.g. wavetables) or generated by sound synthesis (wavetable and/or synth).

- The acoustic scene modeling and rendering takes place in the auralization machine. For this, an acoustic environment model is used, that is, a technique to render the acoustic propagation of the sounds in the environment. This is a functional layer 2 function, which generates the sound fields in the scene. Depending on the size of the scene or on the strategy for dividing the scene for distributed auralization, several auralization machine instances are executed, for example with each one responsible for auralizing an individual sound source.

- The resulting sound fields are encoded into a suitable spatial format, e.g. B-Format (the native first-order Ambisonics format), for transmission to the reproduction system or player.

- The spatialization or auralization begins with the 3D format encoding and ends with the generation of the signals for each loudspeaker used.

- Decoding consists of calculating the outputs for the loudspeaker array. A mixing stage to combine the sound fields from each radiating sound source may be necessary before decoding (the B-Format case) or after it.

- Finally, reproduction consists of amplifying and distributing the independent audio channels to the output transducers, the loudspeakers, arranged in some output mode (configuration) that determines the number of loudspeakers and their positions around the listening area. An auxiliary system to calibrate loudspeakers and intensities and to equalize the output channels is desirable for high levels of realism.
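The encoding and mixing steps in the list above can be illustrated with a small sketch. It assumes the classic first-order B-Format convention (W scaled by 1/√2, with X, Y and Z as direction cosines) and treats each source as a plane wave from a single direction. The function names are hypothetical and are not taken from the AUDIENCE code. Because B-Format is a linear representation, the fields of several sources can be summed channel by channel before decoding.

```python
import math


def encode_b_format(samples, azimuth, elevation=0.0):
    """Encode mono samples into (W, X, Y, Z) frames for one source direction.

    Angles are in radians; azimuth is measured counterclockwise from the front.
    """
    gains = (
        1.0 / math.sqrt(2.0),                     # W: omnidirectional component
        math.cos(azimuth) * math.cos(elevation),  # X: front-back
        math.sin(azimuth) * math.cos(elevation),  # Y: left-right
        math.sin(elevation),                      # Z: up-down
    )
    return [tuple(g * s for g in gains) for s in samples]


def mix_b_format(*fields):
    """Sum several equally long B-Format signals channel by channel."""
    return [
        tuple(sum(channel) for channel in zip(*frames))
        for frames in zip(*fields)
    ]


# Two hypothetical sources playing the same samples, one in front, one to the left.
front = encode_b_format([1.0, 0.5], azimuth=0.0)
left = encode_b_format([1.0, 0.5], azimuth=math.pi / 2)
mixed = mix_b_format(front, left)
```

The mixed signal still has only four channels regardless of how many sources were combined, which is why mixing before decoding is attractive in the B-Format case.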

We use PD (Pure Data, by Miller Puckette) as the programming, synthesis and sonification control platform in the present implementation (phase III), and X3D (from the Web3D Consortium) as the scene description format. Other programming and scene description tools suitable for prototyping setups and patches in real time would be MAX/MSP and the MPEG-4 Audio BIFS format.

Software Description

An auralization machine according to the AUDIENCE architecture is modular software with 4 layers, or main groupings of orthogonal functionality. Each layer executes tasks distinct from those of the others and contains its own management structure. The layers have a communication interface that allows the outputs of one to be inputs to the others. In this way the machine as a whole works like a pipeline, and it can be built by integrating software plugins.
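The pipeline organization can be sketched as a chain of interchangeable functions. Everything in this example is a placeholder chosen for illustration: the layer functions, the dictionary keys and the values they produce are hypothetical and do not reflect the actual AUDIENCE interfaces. Only the plumbing is the point: each layer receives the output of the previous one, so any layer can be replaced by another plugin with the same interface.

```python
from typing import Callable, Dict, List

Layer = Callable[[Dict], Dict]


def build_engine(layers: List[Layer]) -> Layer:
    """Chain layers so that the output of each one feeds the next."""
    def engine(data: Dict) -> Dict:
        for layer in layers:
            data = layer(data)
        return data
    return engine


# Placeholder plugins, one per layer (all values are illustrative stand-ins).
def scene_layer(data: Dict) -> Dict:          # layer 1: scene description
    return {**data, "sources": ["violin", "cello", "flute"]}


def acoustic_layer(data: Dict) -> Dict:       # layer 2: acoustic simulation
    return {**data, "irs": {name: [1.0] for name in data["sources"]}}


def coding_layer(data: Dict) -> Dict:         # layer 3: spatial coding
    return {**data, "format": "B-Format"}


def reproduction_layer(data: Dict) -> Dict:   # layer 4: mixing, decoding, playback
    return {**data, "outputs": 8}


engine = build_engine([scene_layer, acoustic_layer, coding_layer, reproduction_layer])
result = engine({})
```

Swapping `acoustic_layer` for a different simulator would not require changes to the other three functions, as long as it still produces the `irs` entry.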

The current auralization engines have been built in PD (a visual programming interface) as patches of functional blocks. PD offers several advantages as a basis in this phase, mainly due to its flexibility, its orientation toward interconnectable functional blocks (patches), and its real-time operation facilities.

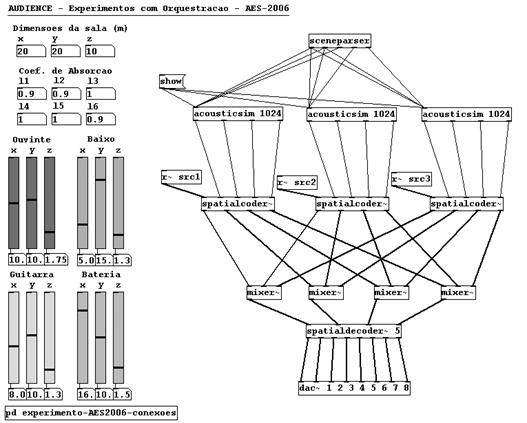

The figure shows the realization of an auralization setup, built with functional blocks in the PD programming platform.

Functional blocks of an auralization engine built in PD

The figure shows the blocks used to auralize a scene with 3 musical instruments, employing the software blocks of the AUDIENCE system in PD.

The room acoustic parameters (e.g. wall absorption coefficients, given in layer 1) are entered in the input blocks (top left). In this example, sliders have been added as a user interface for altering/updating the positions of the instruments and the listener in the scene (layer 1 data). The "sceneparser" block concatenates the relevant layer 1 data and transmits them to the functional blocks in layer 2, seen below.

Layer 2 is represented by the "acousticsim" (acoustic simulator) block, which calculates the acoustic path from each sound source (one for each instrument). The output of this block is a set of impulse responses (IRs) valid for the listener position.
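As a rough illustration of what a layer 2 block produces, the sketch below builds the simplest possible impulse response: the direct path only, in free field. This is an assumption made for the example and not the method used by "acousticsim". The function name, the 343 m/s speed of sound, the 1/distance attenuation and the clamping of distances below 1 m are all illustrative choices. A real simulator would add reflections that depend on the room geometry and on the wall absorption coefficients.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at room temperature


def direct_path_ir(source, listener, sample_rate=48000):
    """Return an impulse response holding only the direct sound.

    The impulse is delayed by the propagation time and scaled by 1/distance
    (distances below 1 m are clamped to keep the gain bounded).
    """
    distance = math.dist(source, listener)
    delay = round(distance / SPEED_OF_SOUND * sample_rate)
    ir = [0.0] * (delay + 1)
    ir[delay] = 1.0 / max(distance, 1.0)
    return ir


# Hypothetical scene: source 3.43 m in front of a listener at the origin.
ir = direct_path_ir((3.43, 0.0, 0.0), (0.0, 0.0, 0.0), sample_rate=1000)
```

With these numbers the sound takes about 10 ms to arrive, so at a 1000 Hz sample rate the single non-zero sample sits at index 10.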

The "spatialcoder" block (directly beneath, in layer 3) receives the signals (IRs) from layer 2 and the anechoic sounds (e.g. the sound sources' wave files) and generates a spatial representation of the sounds heard in the scene (for instance, in B-Format).
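Combining an anechoic sound with an impulse response is a convolution. The sketch below uses a direct, unoptimized convolution to show the operation. It is not the "spatialcoder" implementation, and the sample values are made up for the example. Real-time engines typically use block-based FFT convolution instead.

```python
def convolve(signal, impulse_response):
    """Direct-form convolution of two sample sequences."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


# Hypothetical data: a two-sample dry sound and an IR with a direct
# arrival (delay 2, gain 0.5) plus one reflection (delay 4, gain 0.25).
dry = [1.0, 0.5]
ir = [0.0, 0.0, 0.5, 0.0, 0.25]
wet = convolve(dry, ir)
```

The result contains a delayed, attenuated copy of the dry sound for each non-zero tap of the IR, which is then ready to be encoded into the chosen spatial format.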

The blocks below the "spatialcoder" execute the layer 4 functions (mixing, decoding and reproduction).

Besides the patches, some special blocks implementing specific functions (such as ray-based acoustic simulation techniques) are developed in C/C++ and then incorporated into the AUDIENCE function set in PD. This guarantees freedom in the implementation of specialized functions.

The modeling of the AUDIENCE function set and its interrelationships as use cases is done with UML.

Hardware Description

The hardware of the AUDIENCE for Immersive VR system basically includes:

(1) one or more processors (e.g. PC nodes of a cluster),

(2) one or more sound cards or multichannel devices,

(3) a system for audio channel distribution, and

(4) an array of loudspeakers, arranged in a given geometric configuration.

Hardware for visualization and auralization with AUDIENCE at the Digital Cave

Several output modes or configurations are possible with AUDIENCE. In our research we have given priority to multichannel configurations in which the loudspeakers are positioned around the listening area in a specific (regular) geometry, such as octagonal rings, cubes (8 loudspeakers, one per corner) and even dodecahedra. We have also implemented transcoders to (flat) 5.1 arrays, a format widely used in popular home theaters.

The picture below shows an octagonal ring setup. Regular configurations offer better acoustic performance, more uniform sound field recreation and lower computational cost when calculating outputs.
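To illustrate why regular layouts keep the output calculation cheap, here is a minimal sketch of a horizontal first-order decode to a regular ring. It assumes B-Format input with W scaled by 1/√2 and uses one common "basic" decoding rule. The decoder actually used in AUDIENCE is not described here, so the formula and the function names are assumptions. For a regular ring, each loudspeaker feed is a fixed weighted sum of W, X and Y that depends only on the loudspeaker's angle.

```python
import math


def ring_angles(count):
    """Azimuths (radians) of `count` loudspeakers evenly spaced on a ring."""
    return [2.0 * math.pi * i / count for i in range(count)]


def decode_horizontal(w, x, y, speaker_angles):
    """Basic first-order decode of one (W, X, Y) frame to a regular ring."""
    n = len(speaker_angles)
    return [
        (math.sqrt(2.0) * w + 2.0 * (x * math.cos(a) + y * math.sin(a))) / n
        for a in speaker_angles
    ]


# Unit-amplitude source straight ahead (azimuth 0), encoded as W, X, Y.
octagon = ring_angles(8)
w, x, y = 1.0 / math.sqrt(2.0), 1.0, 0.0
feeds = decode_horizontal(w, x, y, octagon)
```

With this rule the loudspeaker facing the source receives the largest feed, the opposite one receives a small out-of-phase feed, and the feeds sum to the original source amplitude.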

Octagonal setup for loudspeakers

|